The trade-off: Linking commercial process simulations with AI-based multi-objective optimisation

Assen Batchvarov

A story using Quaisr to connect multi-objective optimisation with a styrene production process example in gPROMS.

Overview

This blog post is aimed at engineers and scientists who would like to learn more about multi-objective optimisation techniques. It explores how the Quaisr platform can be used to connect multi-objective optimisation frameworks with simulation tools such as gPROMS, and shares a practical showcase example: optimising styrene production.

What is multi-objective optimisation?

Simply put, multi-objective optimisation aims to find optimal solutions for more than one objective (or goal), where the objectives often conflict with each other. For engineers, balancing competing targets is a day-to-day activity. Balancing objectives such as capital cost, operating costs, product quality and energy consumption was part of my daily life when I worked as a process engineer.

And indeed, for many of the problems we face in engineering, no single optimum exists because the objective functions conflict: for example, maximising product throughput while maintaining high product quality, or maximising a car's performance while minimising fuel consumption and emissions. Luckily, such problems do have solutions: a set of trade-off solutions known as non-dominated, noninferior, Pareto efficient or Pareto optimal (after the Italian civil engineer and economist Vilfredo Pareto, who also popularised the term “elite”). A solution is Pareto optimal when none of its objectives can be improved without making at least one other objective worse. In other words, you can’t have your cake and eat it, or better yet… you can’t have cake all the time and expect to minimise your dentist bill.
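For readers who prefer notation, here is the standard definition. With all k objectives f_1, …, f_k minimised (a maximised objective can simply be negated), a candidate x_a dominates x_b when it is no worse in every objective and strictly better in at least one, and a candidate x* in the feasible set 𝒳 is Pareto optimal when nothing dominates it:

$$
\mathbf{x}_a \prec \mathbf{x}_b \iff \forall i \in \{1,\dots,k\}:\ f_i(\mathbf{x}_a) \le f_i(\mathbf{x}_b)\ \text{ and }\ \exists j \in \{1,\dots,k\}:\ f_j(\mathbf{x}_a) < f_j(\mathbf{x}_b)
$$

$$
\mathbf{x}^* \text{ is Pareto optimal} \iff \nexists\, \mathbf{x} \in \mathcal{X}:\ \mathbf{x} \prec \mathbf{x}^*
$$

The objective vectors of all Pareto optimal solutions together form the Pareto front.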

We refer to problems with up to three objectives as multi-objective optimisation, whereas problems with more than three objectives are called many-objective optimisation. For the remainder of this blog post, we will only discuss multi-objective optimisation, but feel free to drop us a note if you want to know more about many-objective optimisation.

What are common ways to do multi-objective optimisation?

Single-objective optimisation is commonly used to circumvent the need for multi-objective optimisation. Given enough a priori information, we can combine the objectives into a single weighted sum function. Single-objective problems are, as you would expect, faster and easier to solve. But in the case of the weighted sum method, you need to know which weights are suitable before you can compute a solution. You also need information on each objective's lower and upper bounds so the objectives can be normalised (and sometimes you just don’t have this information). Lastly, to discover all Pareto optimal solutions on the Pareto front, you need to run the single-objective optimisation process multiple times, once per set of weights (a common problem for the epsilon-constraint method and other methods that transform the multi-objective problem into a single-objective one). Extra issues arise when the underlying frameworks used to solve the different aspects of our problem become more complex, especially when we shift from algebraic to differential equation problems.
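To make the weighted sum approach concrete, here is a minimal Python sketch on a textbook two-objective toy problem (Schaffer's problem: minimise x^2 and (x - 2)^2 for x in [0, 2]), which is our own choice of example rather than anything from the styrene case. Each objective is normalised with assumed bounds, and a plain golden-section search stands in for whatever single-objective solver you would actually use. Note how every weight needs its own run, and each run yields just one point on the Pareto front.

```python
import math


def f1(x: float) -> float:
    return x ** 2


def f2(x: float) -> float:
    return (x - 2.0) ** 2


# Assumed lower/upper bounds of each objective over x in [0, 2], used for normalisation.
F1_BOUNDS = (0.0, 4.0)
F2_BOUNDS = (0.0, 4.0)


def normalise(value, bounds):
    lo, hi = bounds
    return (value - lo) / (hi - lo)


def weighted_sum(x, w):
    """Scalarise the two objectives into one, using weight w for f1 and 1 - w for f2."""
    return w * normalise(f1(x), F1_BOUNDS) + (1.0 - w) * normalise(f2(x), F2_BOUNDS)


def golden_section_minimise(func, a, b, tol=1e-9):
    """Minimise a unimodal 1-D function on [a, b] (stand-in single-objective solver)."""
    inv_phi = (math.sqrt(5.0) - 1.0) / 2.0
    while abs(b - a) > tol:
        c = b - inv_phi * (b - a)
        d = a + inv_phi * (b - a)
        if func(c) < func(d):
            b = d
        else:
            a = c
    return (a + b) / 2.0


def sweep_weights(n_runs=5):
    """One full single-objective run per weight; each run gives one Pareto point."""
    front = []
    for k in range(n_runs):
        w = k / (n_runs - 1)
        x_star = golden_section_minimise(lambda x: weighted_sum(x, w), 0.0, 2.0)
        front.append((w, x_star, f1(x_star), f2(x_star)))
    return front


for w, x, a, b in sweep_weights():
    print(f"w={w:.2f}  x*={x:.3f}  f1={a:.3f}  f2={b:.3f}")
```

For this convex problem each weight w lands on x* = 2(1 - w). Keep in mind that a weighted sum can only reach Pareto optimal points on convex parts of the front, which is one more reason to look beyond it.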

The truth is that the problems we face in industry are often complex, and decisions about a system are rarely made using a single framework. In FMCG manufacturing, for example, we have formulation modelling, quality modelling, production line modelling, supply chain modelling, and the list goes on.

What if we had a complex system? A system that was noisy, stochastic and discontinuous.

Enter…population-based metaheuristics, an approach that guides a search for an optimal solution by maintaining and improving multiple candidate solutions. Some of the more popular strategies within this optimisation field include evolutionary, genetic and particle swarm algorithms, but more on this later in the article. To understand how these methods work, let’s imagine that each solution to a problem is treated as an individual or a particle.

- First, they are scattered across the solution space, casting a wide net.

- Once their performance has been assessed, the algorithm moves the individuals or particles towards the optimal solution(s).

- The process repeats until progress stalls (convergence flattens out), the diversity of the population collapses (changes no longer bring better solutions), or we simply run out of computational budget.
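The loop described above can be sketched in a few lines of Python. This is a deliberately minimal, single-objective illustration built on our own assumptions (a toy sum-of-squares objective, Gaussian mutation, keep-the-better-half elitism and illustrative parameter values), not the algorithm of any particular library. It shows the three stopping criteria from the list: stalled progress, collapsed diversity and an exhausted evaluation budget.

```python
import random
import statistics


def sphere(x):
    """Toy objective to minimise (assumed for illustration): sum of squares."""
    return sum(xi * xi for xi in x)


def evolve(objective, dim=3, pop_size=20, budget=2000, patience=15,
           min_diversity=1e-6, sigma=0.3, seed=0):
    """Minimal elitist evolutionary loop; returns (best_x, best_f, evaluations)."""
    rng = random.Random(seed)
    # Cast a wide net: scatter the initial population across [-5, 5]^dim.
    pop = [[rng.uniform(-5.0, 5.0) for _ in range(dim)] for _ in range(pop_size)]
    best_so_far, stale, evaluations = float("inf"), 0, 0
    while True:
        # Assess performance (survivors are re-evaluated each generation for simplicity).
        scores = [objective(ind) for ind in pop]
        evaluations += len(pop)
        ranked = [ind for _, ind in sorted(zip(scores, pop), key=lambda t: t[0])]
        gen_best = min(scores)
        # Stopping criterion 1: no improvement for `patience` generations.
        if gen_best < best_so_far - 1e-12:
            best_so_far, stale = gen_best, 0
        else:
            stale += 1
        # Stopping criterion 2: population diversity (mean per-coordinate spread) has collapsed.
        diversity = statistics.mean(
            statistics.pstdev([ind[d] for ind in pop]) for d in range(dim)
        )
        # Stopping criterion 3: the evaluation budget is spent.
        if stale >= patience or diversity < min_diversity or evaluations >= budget:
            return ranked[0], gen_best, evaluations
        # Move towards good regions: keep the better half (elites) unchanged and
        # fill the rest with Gaussian-mutated copies of randomly chosen elites.
        elites = ranked[: pop_size // 2]
        children = [
            [xi + rng.gauss(0.0, sigma) for xi in rng.choice(elites)]
            for _ in range(pop_size - len(elites))
        ]
        pop = elites + children


best_x, best_f, used = evolve(sphere)
print(f"best f = {best_f:.4f} after {used} evaluations")
```

The multi-objective algorithms discussed later keep the same skeleton but replace the simple "sort by score" step with Pareto-based ranking.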

Of course, population-based approaches are not the only ones capable of solving complex multi-objective engineering problems. Surrogate-based optimisation is another candidate, but more on that in another blog post.

Before we dive deeper into population-based techniques, here are some useful definitions:

- Generation (or iteration for particles) - the set of candidate solutions at a specific point in optimisation time.

- Population (or swarm of particles) - the set of candidate solutions maintained and improved by the optimisation algorithm. To guide the search for optimal solutions, the algorithm uses population or particle dynamics.

- Elites - the candidate solutions that are transferred to the next generation with little or no change.

- Crowding distance - a measure of how densely other candidate solutions surround a given solution. In NSGA II it is computed, for each solution, by summing the normalised distances between its two nearest neighbours along each objective, which amounts to a Manhattan distance between those neighbours.

- Diversity - a measure of the distribution of candidate solutions.
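As a sketch of the crowding distance definition above, the Python function below follows the way NSGA II is usually described: sort the front by each objective, give the two boundary solutions an infinite distance so they are always kept, and add each inner solution's normalised gap between its neighbours. The function name and the example front are our own illustrative choices.

```python
def crowding_distance(front):
    """Crowding distance for each objective vector in one non-dominated front.

    `front` is a list of tuples of objective values. Larger values mean the
    solution sits in a sparser region; boundary solutions get infinity.
    """
    n = len(front)
    if n == 0:
        return []
    n_obj = len(front[0])
    distance = [0.0] * n
    for m in range(n_obj):
        order = sorted(range(n), key=lambda i: front[i][m])
        f_min, f_max = front[order[0]][m], front[order[-1]][m]
        distance[order[0]] = distance[order[-1]] = float("inf")
        if f_max == f_min:
            continue  # every solution shares this objective value: no spread to measure
        for k in range(1, n - 1):
            gap = front[order[k + 1]][m] - front[order[k - 1]][m]
            distance[order[k]] += gap / (f_max - f_min)
    return distance


front = [(0.0, 4.0), (1.0, 1.0), (1.5, 0.5), (4.0, 0.0)]
print(crowding_distance(front))  # → [inf, 1.25, 1.0, inf]
```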

Where is the best place to start with population-based optimisation?

A quick search reveals that a lot of people have spent a lot of time trying to come up with new and better population-based optimisation frameworks. On the one hand, that is great; on the other hand, if you are faced with a design deadline or an urgent operations issue, you are better off with a quick starter. So here is what I like to call the first five you try.

The first five you try

Because it can be overwhelming to jump into a field with hundreds of options, we have distilled a list of population-based algorithms for multi-objective optimisation that engineers can try out using default settings.

NSGA II & NSGA III

Non-dominated Sorting Genetic Algorithms, or NSGAs, are evolutionary algorithms that use an elitist principle. If we treat each solution to a problem within the optimisation framework as an individual, only the elites (the best individuals from the combined parent and offspring populations) are given the opportunity to be carried over to the next generation. Another feature of NSGA algorithms is non-dominated sorting: the combined parent and offspring population is ranked into fronts of ascending non-domination level, where the first front holds the solutions that no other solution dominates. NSGA II also uses crowding distance assessment and sorting, which stops solutions from clustering too densely around a single location on the front. As for the difference between NSGA II and NSGA III, the latter is the new kid on the block and uses a set of reference directions to maintain the diversity of the population, whereas the former relies on the crowding distance operator for the same purpose. NSGA III was designed primarily for many-objective problems, where crowding distance becomes less effective at preserving diversity. But you don’t have to take our word for it: head to the pymoo Python library and have a play around. Or you can contact us...
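The non-dominated sorting step can be illustrated with a short, dependency-free Python sketch of the "fast non-dominated sort" described for NSGA II, assuming all objectives are minimised. It is a teaching version (quadratic in the population size, with no constraint handling), not pymoo's implementation, and the function names are our own.

```python
def dominates(a, b):
    """True if objective vector a Pareto-dominates b (all objectives minimised)."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))


def non_dominated_sort(points):
    """Split objective vectors into fronts of indices; fronts[0] is non-dominated."""
    n = len(points)
    dominated_set = [[] for _ in range(n)]  # indices that each point dominates
    domination_count = [0] * n              # how many points dominate each point
    fronts = [[]]
    for p in range(n):
        for q in range(n):
            if p == q:
                continue
            if dominates(points[p], points[q]):
                dominated_set[p].append(q)
            elif dominates(points[q], points[p]):
                domination_count[p] += 1
        if domination_count[p] == 0:
            fronts[0].append(p)
    # Peel off fronts: removing front i may leave some points undominated.
    i = 0
    while fronts[i]:
        next_front = []
        for p in fronts[i]:
            for q in dominated_set[p]:
                domination_count[q] -= 1
                if domination_count[q] == 0:
                    next_front.append(q)
        i += 1
        fronts.append(next_front)
    return fronts[:-1]  # drop the trailing empty front


points = [(1, 5), (2, 3), (3, 4), (4, 1), (5, 5)]
print(non_dominated_sort(points))  # → [[0, 1, 3], [2], [4]]
```

Point (5, 5) ends up in the last front because every other point dominates it, while (3, 4) is only dominated by (2, 3).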

MOEA/D

Multiobjective Evolutionary Algorithm Based on Decomposition, or MOEA/D, decomposes the multi-objective optimisation problem into a number of scalar optimisation subproblems that are optimised simultaneously. Each subproblem is optimised using information from its neighbouring subproblems, which gives MOEA/D a lower computational complexity per generation than non-dominated sorting methods like NSGA. To try out MOEA/D you can once again head to pymoo, or give us a shout at info@quaisr.com.
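The decomposition idea can be sketched in Python for two objectives. This sketch assumes evenly spaced weight vectors, Euclidean neighbourhoods and the Tchebycheff scalarising function (one common choice in MOEA/D; weighted sums are another). For brevity it tracks only objective vectors and leaves out the variation operators that create new candidates from neighbouring parents; all function names are hypothetical.

```python
import math


def weight_vectors(n):
    """n evenly spaced weight vectors for two objectives (each pair sums to 1)."""
    return [(i / (n - 1), 1.0 - i / (n - 1)) for i in range(n)]


def neighbourhoods(weights, t):
    """For each subproblem, the indices of the t closest weight vectors (itself included)."""
    return [
        sorted(range(len(weights)), key=lambda j: math.dist(w, weights[j]))[:t]
        for w in weights
    ]


def tchebycheff(f, weight, ideal):
    """Scalar subproblem value: weighted worst-case distance from the ideal point."""
    return max(w * abs(fi - zi) for w, fi, zi in zip(weight, f, ideal))


def update_neighbours(i, candidate_f, current_f, weights, nbrs, ideal):
    """Offer a candidate created for subproblem i to its neighbours.

    Each neighbour j adopts the candidate if it scores better on j's own scalar
    subproblem. Returns the indices that were replaced.
    """
    replaced = []
    for j in nbrs[i]:
        if tchebycheff(candidate_f, weights[j], ideal) < tchebycheff(current_f[j], weights[j], ideal):
            current_f[j] = candidate_f
            replaced.append(j)
    return replaced


weights = weight_vectors(5)
nbrs = neighbourhoods(weights, 3)
current_f = [(0.0, 4.0), (1.0, 3.0), (2.0, 2.0), (3.0, 1.0), (4.0, 0.0)]
# Ideal point: best value seen so far for each objective (a full run also
# updates it whenever a new candidate improves on it).
ideal = tuple(min(f[k] for f in current_f) for k in range(2))
print(update_neighbours(2, (1.0, 1.0), current_f, weights, nbrs, ideal))
```

Because a candidate only competes within a small neighbourhood of subproblems, each update is cheap compared with re-sorting the whole population.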

MO-DE

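Multi-objective Differential Evolution, or MO-DE, works on the same evolutionary principles but creates new candidate solutions with differential evolution operators, which perturb one population member by the scaled difference between two others. It is often described as an improvement on more classical algorithms like NSGA. Once again, you don’t have to take our word for it: head to the DE page in pymoo for a Python implementation.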
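The differential evolution operator at the heart of MO-DE can be sketched as follows. The trial vector uses the classic DE/rand/1/bin scheme, and the Pareto-based selection mirrors the pattern used by multi-objective DE variants such as DEMO, where a trial replaces its target only if it dominates it. The values F = 0.5 and CR = 0.9 are illustrative, and the helper names are our own.

```python
import random


def dominates(a, b):
    """True if objective vector a Pareto-dominates b (all objectives minimised)."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))


def de_trial_vector(population, target_idx, F=0.5, CR=0.9, rng=random):
    """Build one DE/rand/1/bin trial vector for population[target_idx]."""
    target = population[target_idx]
    dim = len(target)
    # Mutation: pick three distinct members (none of them the target) and
    # perturb the first by the scaled difference of the other two.
    candidates = [i for i in range(len(population)) if i != target_idx]
    r1, r2, r3 = rng.sample(candidates, 3)
    a, b, c = population[r1], population[r2], population[r3]
    mutant = [a[d] + F * (b[d] - c[d]) for d in range(dim)]
    # Binomial crossover: take each gene from the mutant with probability CR,
    # and always take gene j_rand from it so at least one gene is inherited.
    j_rand = rng.randrange(dim)
    return [mutant[d] if rng.random() < CR or d == j_rand else target[d] for d in range(dim)]


def de_select(target_f, trial_f):
    """Pareto-based selection between a target and its trial (sketch)."""
    if dominates(trial_f, target_f):
        return "replace target"
    if dominates(target_f, trial_f):
        return "keep target"
    # Neither dominates: keep both; the enlarged population is later truncated
    # with non-dominated sorting and crowding distance.
    return "keep both"


rng = random.Random(1)
population = [[rng.uniform(0.0, 1.0) for _ in range(3)] for _ in range(6)]
trial = de_trial_vector(population, target_idx=0, rng=rng)
print(trial, de_select((1.0, 2.0), (0.5, 2.5)))
```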

MO-PSO

We now move from talking about populations to particles. Multi-objective Particle Swarm Optimisation, or MO-PSO, is a popular way of doing multi-objective optimisation with a relatively low number of objective function evaluations. The core ideas behind Particle Swarm Optimisation were developed in the mid to late 1990s to simulate social behaviour, borrowing ideas from the movement of organisms in fish schools and bird flocks. The algorithms use a measure of quality to iteratively improve candidate solutions by moving the particles around the search space: each particle is pulled towards the best position it has found itself and towards a leader chosen from the best solutions found by the swarm. Head over to the PyGMO page for a handy Python implementation.
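The particle update can be sketched in Python as below. This is a simplified illustration under stated assumptions: leaders are picked uniformly at random from an unbounded archive of non-dominated solutions, whereas published MO-PSO variants typically use density-aware leader selection and cap the archive size. The inertia (w) and acceleration (c1, c2) values are illustrative, not tuned, and the function names are hypothetical.

```python
import random


def dominates(a, b):
    """True if objective vector a Pareto-dominates b (all objectives minimised)."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))


def update_archive(archive, position, f):
    """Add (position, f) to the external archive if nothing in it dominates or
    duplicates f, and drop any archived entries that f dominates."""
    if any(dominates(af, f) or af == f for _, af in archive):
        return archive
    return [(p, af) for p, af in archive if not dominates(f, af)] + [(position, f)]


def move_particle(x, v, pbest, leader, w=0.4, c1=1.5, c2=1.5, rng=random):
    """PSO velocity and position update: inertia, a pull towards the particle's
    personal best, and a pull towards a leader taken from the archive."""
    new_v = [
        w * vi + c1 * rng.random() * (pi - xi) + c2 * rng.random() * (li - xi)
        for xi, vi, pi, li in zip(x, v, pbest, leader)
    ]
    new_x = [xi + vi for xi, vi in zip(x, new_v)]
    return new_x, new_v


rng = random.Random(0)
archive = []
for position, f in [([0.0], (1.0, 4.0)), ([1.0], (2.0, 2.0)), ([2.0], (3.0, 3.0))]:
    archive = update_archive(archive, position, f)
leader = rng.choice(archive)[0]  # simplification: uniform random leader selection
x, v = move_particle([0.5], [0.0], pbest=[0.5], leader=leader, rng=rng)
print([f for _, f in archive], x, v)
```

Here (3, 3) never enters the archive because (2, 2) dominates it, so the swarm is only ever steered towards non-dominated solutions.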

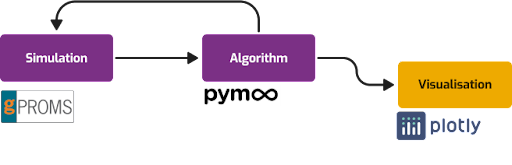

Multi-objective optimisation using Quaisr

The Quaisr connectivity platform lets engineers and scientists accelerate the development of end-to-end workflows using multi-objective optimisation Blocks. The platform comes pre-loaded with Blocks for the five techniques discussed above. Users can connect multi-objective optimisation Blocks to simulation and data frameworks to build scalable decision-support tools that can be passed on to a wider audience. Equally, multi-objective optimisation experts can deploy custom Blocks and let the Quaisr platform handle the orchestration of the end-to-end Workflows. Next, we explore a practical example of applying multi-objective optimisation to styrene production.

Optimising Styrene objectives using gPROMS and Quaisr

Styrene monomer, commonly known as styrene, is one of the essential building blocks of the chemical industry. In the past, styrene revolutionised the packaging industry. Today, it is a cost-effective solution for energy-efficient building insulation, wind turbine blades and lightweight automotive parts. Globally, the market for styrene is huge: in 2021 it was estimated to be worth over $49 billion, with production of approximately 30 million tonnes.

The challenges

Thanks to a myriad of application areas, the styrene market is expected to grow to over $62 billion by 2026. But with the logistical challenges brought on by the COVID-19 pandemic and the ongoing effects of the Russia-Ukraine war on energy prices, designing and operating styrene facilities is becoming a demanding task with many moving parts, which makes it an ideal candidate for a multi-objective optimisation strategy.

How is styrene made?

Traditionally, styrene is produced via the dehydrogenation of ethylbenzene. The reaction takes place in a catalytic reactor and is followed by an energy-intensive separation process that recycles the unreacted ethylbenzene. Early on in design, engineers face some significant decisions. The catalytic reactor is not cheap, but optimising for a smaller, less expensive reactor lowers the conversion per pass, so more unreacted ethylbenzene has to be separated and recycled, leading to an energy-hungry (and expensive) separation process. Hmm… A daunting task, but nothing that an engineer can’t handle. Tools like gPROMS allow engineers to flowsheet the process and perform design optimisation using mathematical programming techniques such as mixed integer nonlinear programming. These kinds of problems are the bread and butter of process engineering. However, if you are not a chemical engineer and want to get familiar with this process, or if you just want to reminisce, you can check out the detailed styrene example from PSE.
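To see why the reactor and the separation section pull in opposite directions, here is a deliberately crude toy model in Python. Everything in it is a hypothetical assumption for illustration, not the PSE styrene model: a first-order plug-flow reactor gives single-pass conversion X = 1 - exp(-kV/Q), capital cost grows linearly with reactor volume V, and separation energy grows with the unreacted ethylbenzene recycled per unit converted, (1 - X)/X. It ignores the equilibrium limit and heat effects of the real dehydrogenation reaction.

```python
import math

# All constants are hypothetical, chosen only to illustrate the trade-off.
K_OVER_Q = 0.05          # rate constant / volumetric flow, 1/m^3 (assumed)
COST_PER_M3 = 1.0        # relative capital cost per m^3 of reactor (assumed)
DUTY_PER_RECYCLE = 10.0  # relative separation energy per unit recycled (assumed)


def conversion(volume):
    """Single-pass conversion of an assumed first-order plug-flow reactor."""
    return 1.0 - math.exp(-K_OVER_Q * volume)


def objectives(volume):
    """Return (capital cost, separation energy) for a reactor of the given volume."""
    x = conversion(volume)
    capital = COST_PER_M3 * volume
    # Unreacted ethylbenzene to separate and recycle per unit converted: (1 - X) / X.
    separation_energy = DUTY_PER_RECYCLE * (1.0 - x) / x
    return capital, separation_energy


for v in (10, 20, 40, 80):
    cap, energy = objectives(v)
    print(f"V={v:>3} m^3  capital={cap:6.1f}  separation energy={energy:6.2f}")
```

Each larger reactor costs more but needs less separation energy, so none of these designs dominates another: they all sit on a trade-off curve, which is exactly the situation multi-objective optimisation is built for.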

Why use population- or particle-based multi-objective optimisation? The reality nowadays is that finding a good enough solution means working with a complex web of interconnected parts. Process modelling tools such as gPROMS Process benefit greatly from connectivity to other systems, such as supply chain models. But connecting frameworks that perform different computations makes it hard to describe the problem as a single system of equations to be optimised using techniques such as mixed integer nonlinear programming. It is no longer one solution framework that we need to optimise, but a system of frameworks. Because population- and particle-based methods only need to evaluate candidate solutions, they can treat such a system as a black box, which makes them a great fit.

Making fast decisions about competing objectives

In theory, engineers are well equipped with the knowledge and the skill required to create complex decision pipelines.

In practice, engineers in large organisations are constantly battling with a huge backlog of decisions. If you don’t believe me, just check your Inbox for all the operations, design or R&D requests that are coming in as you are reading this blog. The backlog keeps growing. Here are some reasons why:

- You don’t have a reliable way to keep track of past decisions.

- You don’t have the time to experiment with new techniques that can improve and speed up decision making.

- You rely on a software engineering team to connect systems and tools, but they are facing an equally monstrous backlog.

Don’t let any of these reasons hold you back. Using Quaisr, you can reliably create, connect and consolidate your decision-making processes. Here is how we did it for the styrene plant example.

Step 1. Automate gPROMS and all your other favourite tools.

SimOps is the framework that Quaisr is pioneering to help you manage the lifecycle of your simulations. Quaisr lets you create a Block from any simulation: all you need to do is declare the simulation inputs, outputs and the starting point of the simulation. For gPROMS, Quaisr has already created a Block that can run any case exported from gPROMS Process. Quaisr tracks your runs, creates audit trails for them and lets you easily connect your gPROMS Block to other frameworks. You are now free to experiment.

Step 2. Connect and experiment

Connect your gPROMS simulation Block to the multi-objective optimisation Block. Drag in the visualisation Blocks that will help you build the decision-making graphs (for styrene, Pareto comes to the rescue). Do it collaboratively, with the peace of mind that all of your actions are tracked. And most importantly, you don’t have to worry about navigating compute infrastructure or data exchange between the Blocks. Quaisr has you covered!

Step 3. Create Apps and share with decision makers

Using simple drag-and-drop, you can prepare Apps to share with the wider organisation. Share your decision-making workflows and empower your colleagues. Watch that backlog disappear as users drive the decision-making process with the tools created by experts.

Key takeaways

- Quaisr empowers engineers to focus on the development of better simulations and models.

- Quaisr lets users connect different frameworks to quickly experiment with new decision-making pipelines.

- Quaisr saves time and money by letting engineers share their knowledge across the whole organisation.